Implementing high availability (HA) at the VMware layer is great. Why would you need anything else? Well, as useful as the solution is — and it does help to protect against some types of failures — VMware HA alone simply doesn’t cover all the bases.

According to Gartner Research, most unplanned outages are caused by application failure (40 percent of outages) or admin error (40 percent). Hardware, network, power, or environmental problems cause the rest (20 percent total). VMware HA focuses on protection against hardware failures, but a good application-clustering solution picks up the slack in other areas. Here are a few things to consider when architecting the proper HA strategy for your VMware environment.

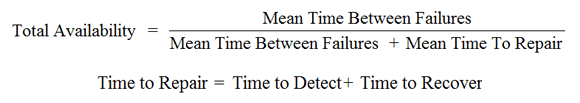

Shorten outages with application-level monitoring and clustering. What about recovery speed? In a perfect world, there would be no failures, outages, or downtime. But if an unplanned outage does occur, the next best thing is to get up and running again, fast. This equation represents the total availability of your environment:

As you can see, detection time is a crucial piece of the equation. Here’s another place where VMware HA alone doesn’t quite cut it. VMware HA treats each virtual machine (VM) as a “black box” and has no real visibility into the health or status of the applications that are running inside. The VM and OS running inside might be just fine, but the application could be stopped, hung, or misconfigured, resulting in an outage for users.
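One common way to write availability, assumed here for illustration, uses mean time between failures (MTBF) and mean time to repair (MTTR). The split of MTTR into a detection term and a recovery term, and the symbols themselves, are assumptions rather than the article's own notation:

$$
\text{Availability} = \frac{\text{MTBF}}{\text{MTBF} + \text{MTTR}},
\qquad
\text{MTTR} = T_{\text{detect}} + T_{\text{recover}}
$$

Under this formulation, for a fixed MTBF, any reduction in detection time lowers MTTR directly and so raises availability.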

Even when a host server failure is the issue, you must wait for VMware HA to restart the affected VMs on another host in the VMware cluster. That means that applications running on those VMs are down until 1) the outage is detected, 2) the OS boots fully on the new host system, 3) the applications restart, and 4) users reconnect to the apps.

By clustering at the application layer between multiple VMs, you are not only protected against application-level outages, you also shorten your outage-recovery time. The application can simply be restarted on a standby VM, which is already booted up and waiting to take over. To maximize availability, the VMs involved should live on different physical servers or, even better, in separate VMware HA clusters or separate data centers.
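The difference between the two recovery paths can be sketched as a simple sum of phases. This is a rough illustration only: the class name `OutagePhases` and every duration below are hypothetical, not measurements, and the only thing varied is the OS boot phase, which a standby VM that is already running skips.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutagePhases:
    """Seconds spent in each phase of an outage. All values are hypothetical."""
    detect: float          # time until the failure is noticed
    os_boot: float         # time for the guest OS to boot (0 if already running)
    app_restart: float     # time for the application to start
    user_reconnect: float  # time for users to reconnect

    def total_downtime(self) -> float:
        """Users are down until the last phase completes, so sum the phases."""
        return self.detect + self.os_boot + self.app_restart + self.user_reconnect


# Made-up numbers: host failure handled by restarting the VM on another host.
vm_restart = OutagePhases(detect=30, os_boot=120, app_restart=60, user_reconnect=30)

# Made-up numbers: failover to a standby VM that is already booted.
standby_failover = OutagePhases(detect=30, os_boot=0, app_restart=60, user_reconnect=30)

print(vm_restart.total_downtime())        # 240
print(standby_failover.total_downtime())  # 120
```

With real measurements substituted for the placeholders, the same sum shows how much of an outage is spent simply waiting for an operating system to boot.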

Eliminate storage as a potential single point of failure (SPOF). Traditional clustering solutions, including VMware HA, require shared storage and typically protect applications or services only within a single data center. Technically, the shared-storage device represents an SPOF in your architecture. If you lose access to the back-end storage, your cluster and applications are down for the count. The goal of any HA solution is to increase overall availability by eliminating as many potential SPOFs as possible.

So how can you augment a native VMware HA cluster to provide greater levels of availability? To protect your entire stack, from hardware to applications, start with VMware HA. Next, you need a way to monitor and protect the applications. Clustering at the application level (i.e., within the VM) is the natural choice. Be sure to choose a clustering solution that supports host-based data replication (i.e., a shared-nothing configuration) so that you don’t need to go through the expense and complexity of setting up SAN-based replication. SAN replication solutions also typically lock you into a single storage vendor. On top of that, to cluster VMs by using shared storage, you generally need to enable Raw Device Mapping (RDM), which means that you lose access to many powerful VMware functions, such as vMotion.
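As a minimal sketch of what monitoring inside the VM can mean, the snippet below probes the application itself rather than the VM and recommends failover after several consecutive failed probes. It does not describe how any particular clustering product works: the function names `tcp_probe` and `should_fail_over`, the threshold of three, and the choice of a TCP connection test are all assumptions made for illustration.

```python
import socket
from typing import Iterable


def tcp_probe(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if something accepts TCP connections on host:port.

    A hypothetical application-level check: the VM and OS can be up
    while this still fails because the application is stopped.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def should_fail_over(probe_results: Iterable[bool], failure_threshold: int = 3) -> bool:
    """Return True once `failure_threshold` consecutive probes have failed."""
    consecutive_failures = 0
    for healthy in probe_results:
        # A healthy probe resets the counter; a failed one increments it.
        consecutive_failures = 0 if healthy else consecutive_failures + 1
        if consecutive_failures >= failure_threshold:
            return True
    return False


# Three failures in a row trigger failover; an isolated failure does not.
print(should_fail_over([True, False, False, False]))  # True
print(should_fail_over([False, True, False, False]))  # False
```

Requiring consecutive failures is one simple way to avoid failing over on a single transient blip. A connection test alone would not catch a hung or misconfigured application that still accepts connections, so a real check would usually exercise the application more deeply.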

Going with a shared-nothing cluster configuration eliminates the storage tier as an SPOF and at the same time allows you to use vMotion to migrate your VMs between physical hosts, a win-win. A shared-nothing cluster is also an excellent solution for disaster recovery because the standby VM can reside at a different data center.

Cover all the bases. Application-failover clustering, layered over VMware HA, offers the best of both worlds. You can enjoy built-in hardware protection and application awareness, greater flexibility and scalability, and faster recovery times. Even better, the solution doesn’t need to break the bank.